- Introduction

- User Behaviors: Turning Tools into Experts

- Product Development: Real-Time Discovery Loops

- Example: Customer Support Assistant

- Gen 0: Live chat & Knowledge base search

- Gen 1: LLM Agentic workflows

- Gen 2: Goal-oriented Agentic systems

- Conclusion

Introduction

While LLMs astound both builders and observers with their climbing benchmarks, falling costs, and expanding reasoning abilities, their true impact on everyday software is only beginning to emerge.

The mainstream breakthrough of ChatGPT, which became the fastest-growing consumer product in history, sparked widespread public fascination, user adoption, and an industry gold rush.

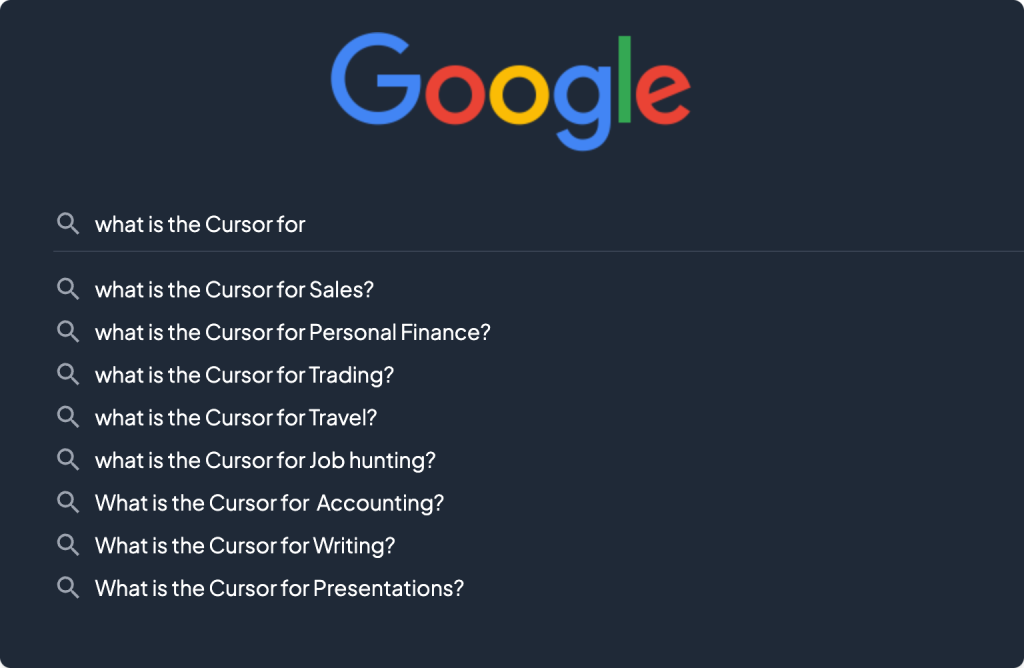

Fast forward to today: users are fundamentally changing how they search (Perplexity), think (Deepseek), and work (Cursor), as evidenced by the impressive daily active user counts and retention rates of leading AI assistants (ChatGPT, Claude, Gemini, Grok, and others).

Across all software interfaces, users now approach the <input-box> with entirely new expectations – a shift that could transform entire industries.

This piece draws on our research into code-first adaptable AI systems and their profound implications for user behavior, product development, and the broader software market.

User Behaviors: Turning Tools into Experts

Where have we seen this before?

Google’s search box captured people’s imagination by placing “the world’s information” at our fingertips. Google acquired the identity of the “expert” you go to in order to settle a dispute among friends over a fact, search for a venue, or learn more about a competitor.

In fact, Google’s identity became so ingrained in our culture that it ended up in the Oxford English Dictionary by 2006.

This is the power of transitioning from a tool to a domain expert in your user’s mind.

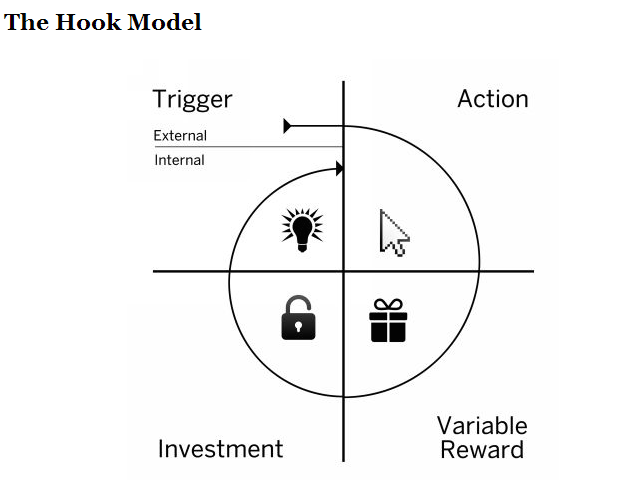

Outcome-based products like Google Search are different:

- they offer low friction action, often using unstructured inputs

- they offer variable rewards (some results are truly amazing, some a bit less so)

- they often require (and/or reward) some investment from the user, such as extra effort for personalization or some acquired skill, to improve search results

- once these products succeed in forming a habit loop, they attach themselves firmly to external/internal triggers, i.e. users will know when to <fill the product>, in our case “Google”

From Hooked, by Nir Eyal (2013)

Now where is this analogy going?

These product experiences were previously limited to a few companies with sufficient data, infrastructure, and ML expertise to form these loops. However, with LLMs and the widespread adoption of ML techniques, this is no longer the case.

We think the same building blocks used to create these early category-defining flywheels are now finally within reach of all builders.

In fact, we speculate that users will soon come to expect goal-oriented experiences from most software they interact with.

The “magic input box”, the expectation that your goal/intent can be solved within the domain you associate with the tool you are using, will become table stakes for many core applications.

Many products that today lead their vertical will need to race toward “expert” status to avoid being leapfrogged by competitors and see their customers churn.

Soon, having a “dumb” support assistant that merely guides users through predefined workflows (even if using LLM & RAG to navigate options) will make you appear outdated when compared to goal-oriented systems.

Example:

A "dumb" assistant might respond to "Help me reduce expenses" by walking through a predefined menu of budget tracking features or articles in your knowledge base. In contrast, a goal-oriented expert would say "I notice you're spending 40% of your budget on SaaS tools - I can analyze usage patterns, identify redundant subscriptions, and create an optimization plan to reduce costs by 25% this quarter. Should we start there?"

Product Development: Real-Time Discovery Loops

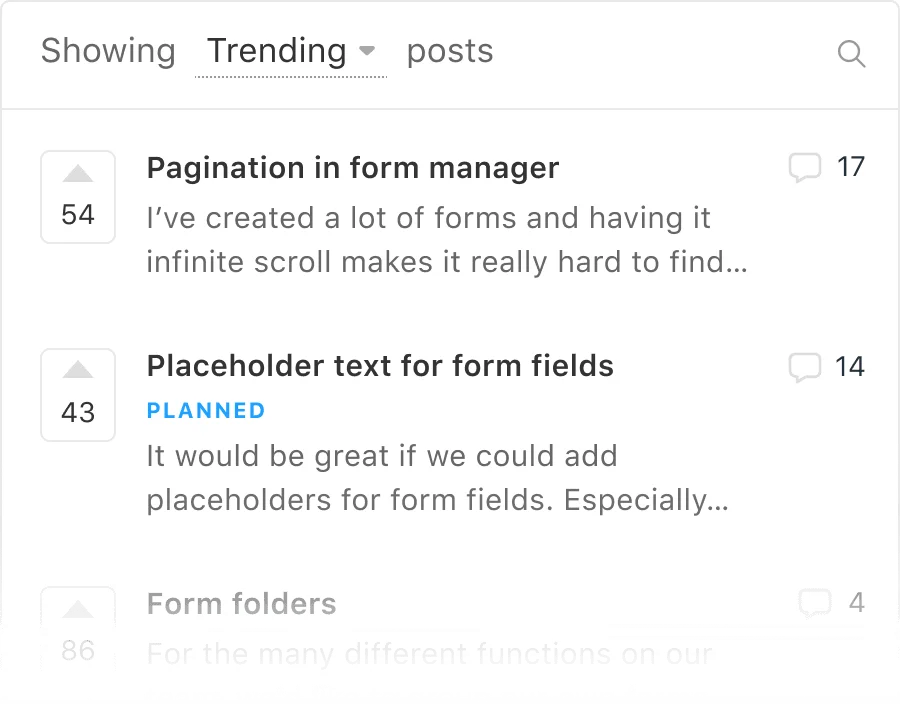

Goal-oriented systems will leapfrog traditional systems not only because of the shift in user expectations, but also through their ability to build the product iteratively with their end users, in real time.

Imagine a dynamic system that can continuously adapt based on each user’s usage or direct requests, making product discovery (the process of refining a product around users’ pain points and needs) a continuous reinforcement loop. This will fundamentally change the speed at which software can adjust to user needs.

💡 Imagine:

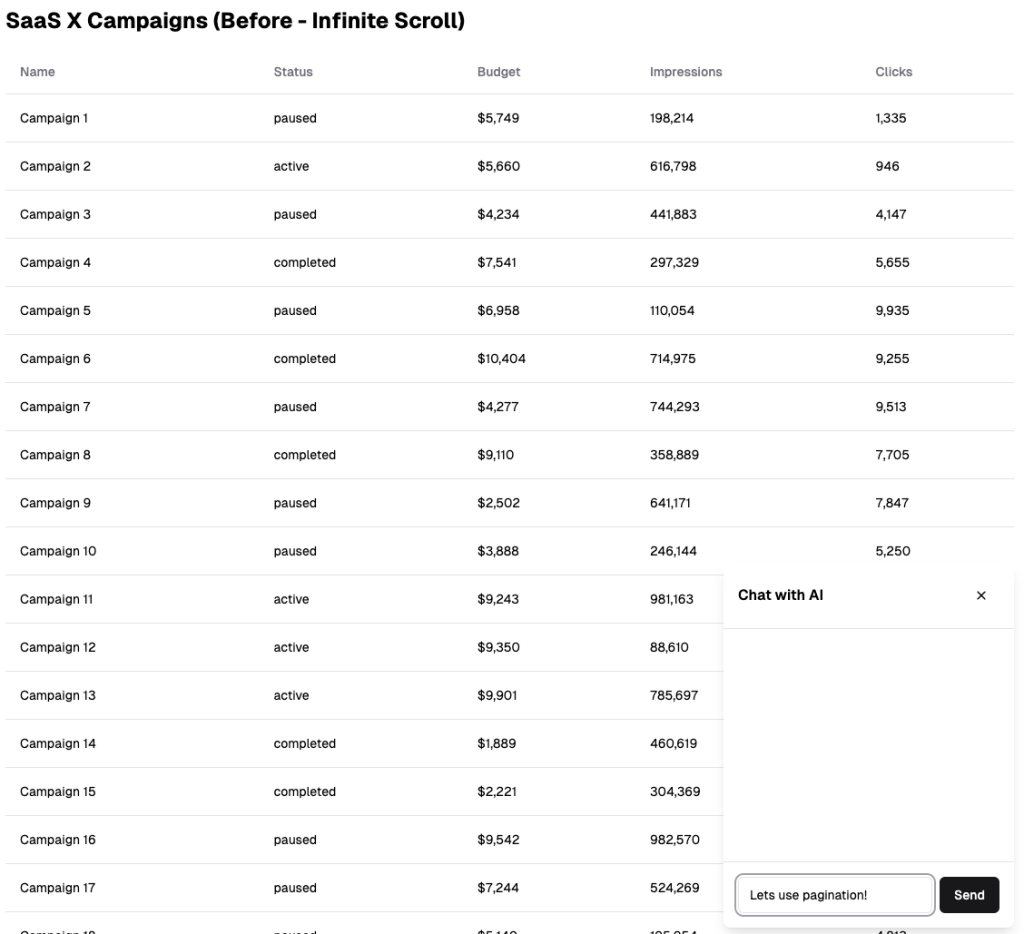

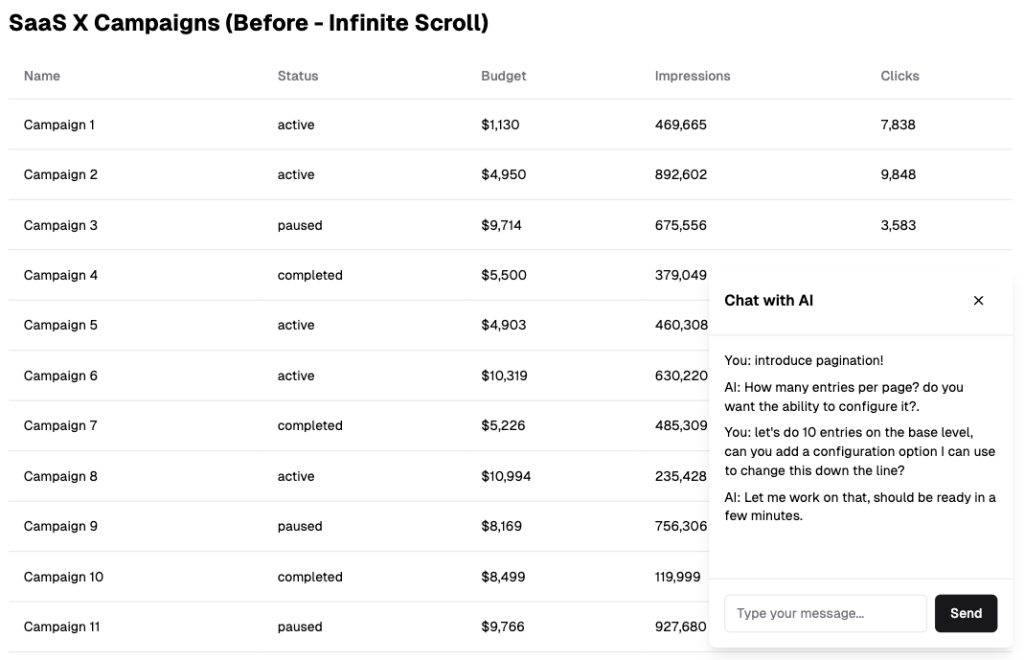

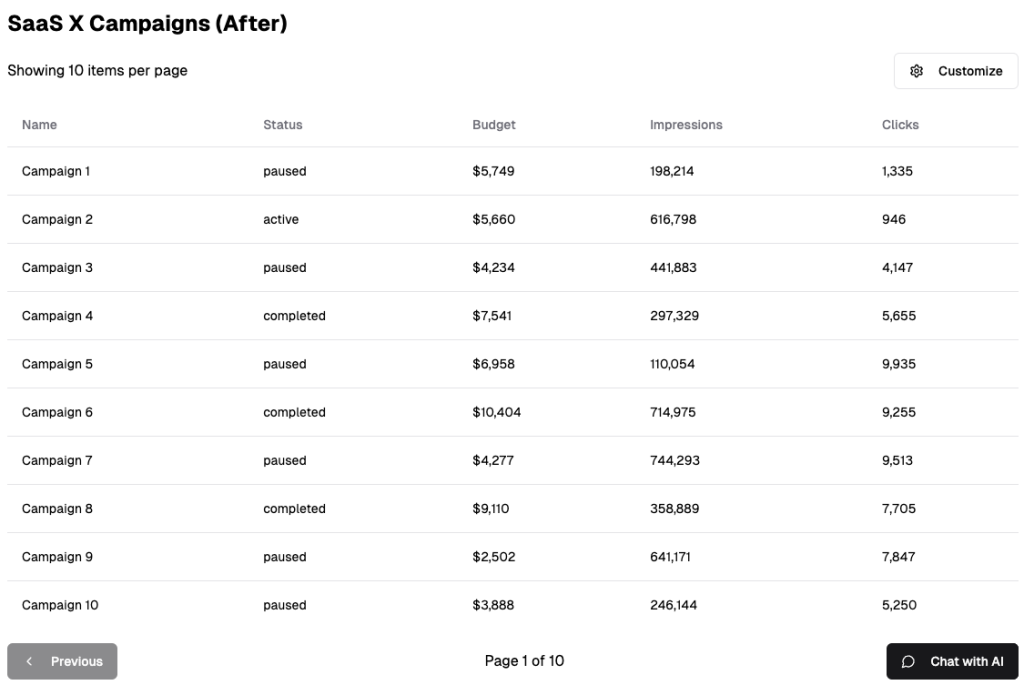

You're a power user of SaaS X, a marketing campaign software you rely on. As your campaign volume grows, the product slows down and becomes difficult to navigate. You crave more control, like the ability to adjust pagination size. Currently, you're stuck submitting product requests or upvoting existing ones, hoping for enough support. In the future, you'll simply ask <the magic input box> of the app you are using to "adjust the layout" and expect instant results.

Linear Feedback Loop

Real Time Feedback Loop

Example from Canny.io, where users can submit feature requests and vote for them to be picked up in future releases

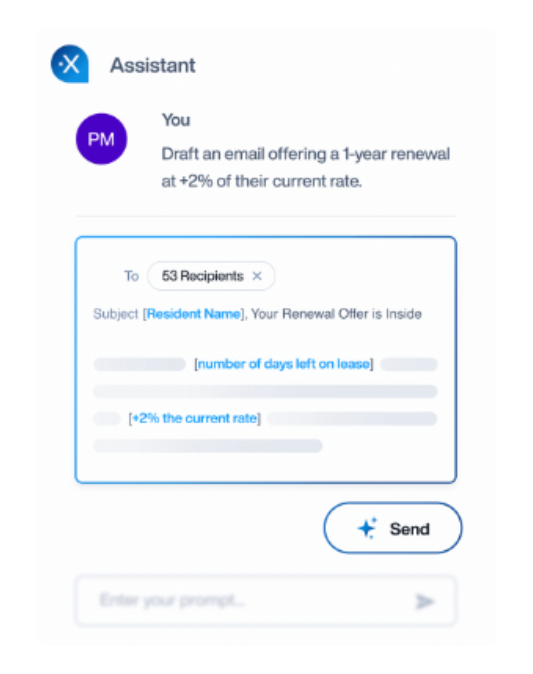

Example of “intent”-based feedback: the user is conditioned to expect the ability to change the results and directly prompts the agent for it

This is a key benefit of the flexibility of goal-oriented products: the user is conditioned to own the system through the <input box> and will volunteer both requirements and feedback on their implementation in real time to make their own life better.

This may seem small, but you will get far more signals about how your product is used and about what product your users actually want.

For developers, imagine how many more experiments you can run by treating product discovery as a continuous reinforcement loop. For users, imagine how great it would be if your product adjusted in real time to your requests.

This shift could be as significant as the transition from self-hosted software to SaaS, where companies gained a better chance of finding product-market fit through rapid experimentation enabled by faster customer feedback loops.

Example: Customer Support Assistant

A canonical example often used to illustrate the integration of AI into existing SaaS is the “Customer Chat Bot”, or assistant.

Gen 0: Live chat & Knowledge base search

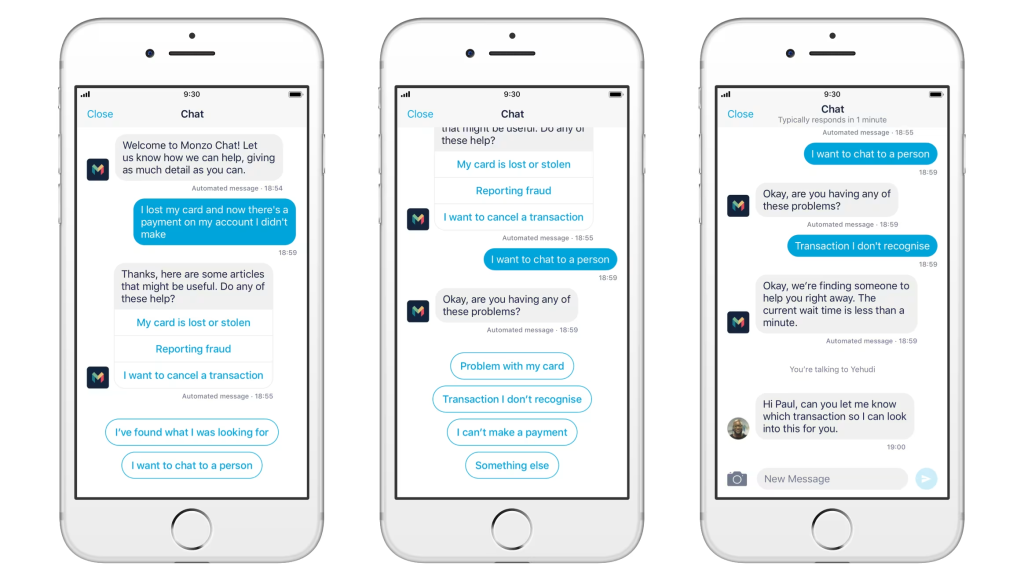

In their earliest form, these were widgets that combined live chat (with a support team) and knowledge base search (fuzzy or similarity-based). They are often simple, omnipresent call-to-action widgets that let customers reach support from within the app whenever they want.

Below is an example: the “Chat widget” of Monzo, a bank known in the UK for consistently ranking among the first banks for customer satisfaction.

🐢 Limitations:

- These are the “SaaS” equivalent of support@company.com: they offer a faster response time than email, simple (and often very formulaic) retrieval from a knowledge base, or an expensive way of addressing user queries through on-call support staff.

- However, a user doesn’t think “let me talk with Monzo”; they reach for this only when they have genuinely run into an issue, because they equate it with customer support. In short, this isn’t yet a magic input box.

Gen 1: LLM Agentic workflows

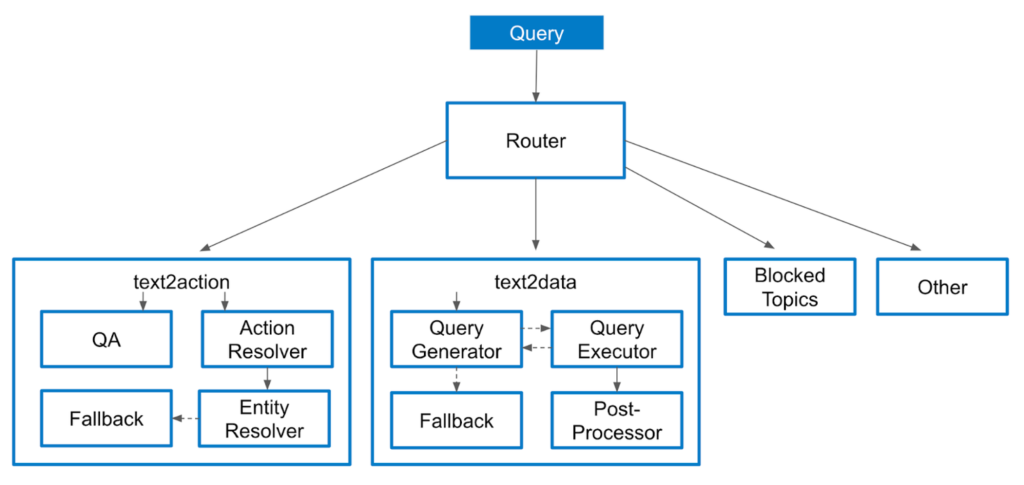

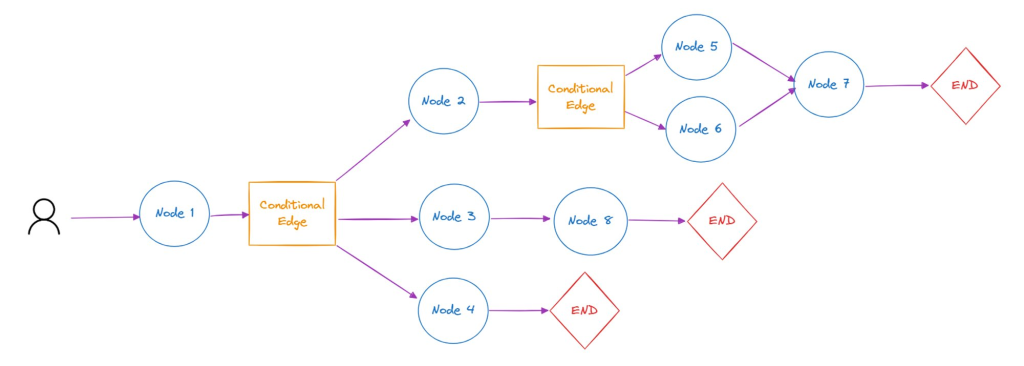

Example of a generic agentic customer bot

A typical 2024 customer agent design looks more like a pipeline, where incoming user queries are routed to specific predefined agentic workflows: often one set handles direct actions (“text2action”) and another handles data retrieval (“text2data”).

Each workflow is meticulously orchestrated with known tools, fallback strategies, dynamic few-shot examples drawn from a manually curated pool, and triggers for collecting feedback and improving performance. This ensures predictable, reliable outcomes based on well-tested logic.
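To make the pipeline shape concrete, here is a minimal sketch of such a router. Everything in it is an illustrative assumption rather than a real product's design: the keyword-based `classify` function stands in for the LLM routing call, and the handler names simply mirror the “text2action” and “text2data” labels above.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Reply:
    workflow: str  # which predefined path handled the query
    text: str


def text2action(query: str) -> Reply:
    # Hypothetical workflow for direct actions (e.g. freezing a card).
    return Reply("text2action", f"Running action for: {query}")


def text2data(query: str) -> Reply:
    # Hypothetical workflow for data retrieval (e.g. listing transactions).
    return Reply("text2data", f"Looking up data for: {query}")


def fallback(query: str) -> Reply:
    # Fallback strategy when no predefined workflow matches.
    return Reply("fallback", "Handing over to a human agent.")


WORKFLOWS: Dict[str, Callable[[str], Reply]] = {
    "text2action": text2action,
    "text2data": text2data,
}


def classify(query: str) -> str:
    """Stand-in for the LLM routing step: simple keyword rules (assumption)."""
    q = query.lower()
    if any(word in q for word in ("freeze", "cancel", "send")):
        return "text2action"
    if any(word in q for word in ("how much", "show", "list")):
        return "text2data"
    return "unknown"


def route(query: str) -> Reply:
    # The route is chosen dynamically, but the set of routes is hardcoded.
    handler = WORKFLOWS.get(classify(query), fallback)
    return handler(query)
```

Note that any request outside the two hardcoded routes can only ever reach the fallback, however capable the underlying model is.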

🐢 Limitations:

- These are early LLM workflows that aren’t truly goal-oriented systems: they use hardcoded paths (with some agency from the LLM to pick which route) to patch current model limitations, but are likely to be supplanted by more advanced flows as models progress.

- The limited range of predefined workflows prevents them from being perceived as true “experts” in their domain, so this isn’t yet a “magic input box” either.

Gen 2: Goal-oriented Agentic systems

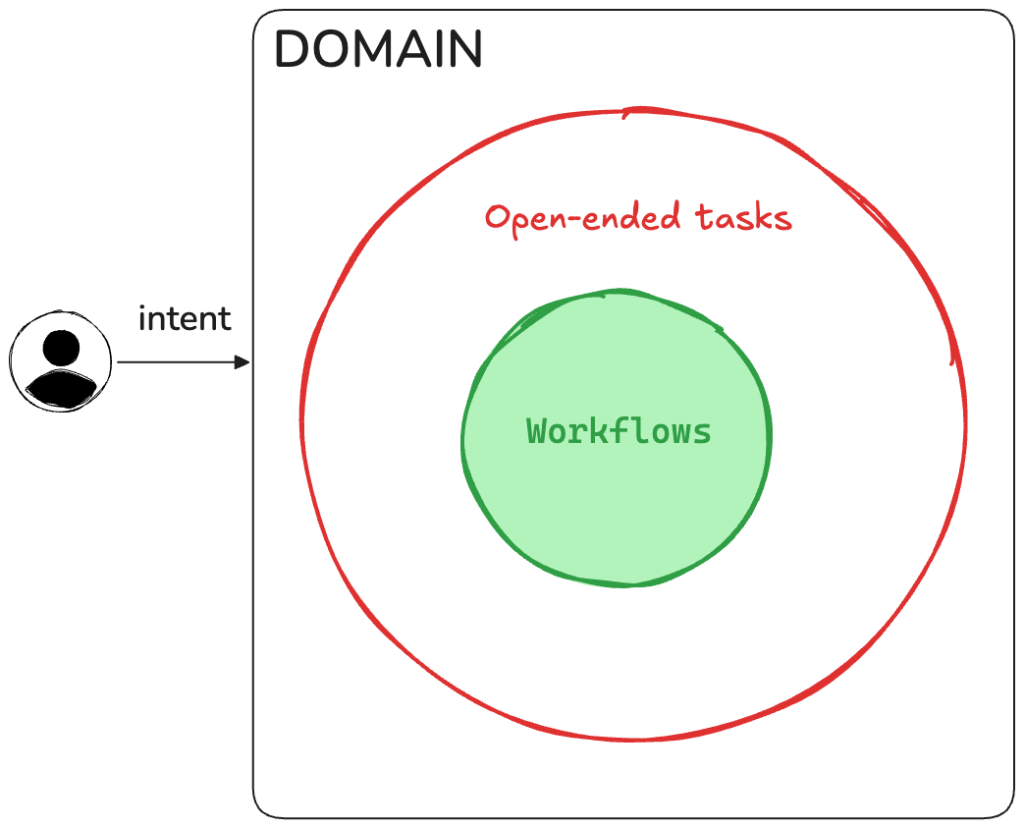

An expert system should handle two types of tasks: defined tasks with established paths and predictable results, and open-ended tasks where the solution emerges through observation, reasoning, and context.

The user should view your solution as the domain expert, and that perception should be earned through reaffirming “rewards”: the system proving its worth on challenging tasks.

For a customer support agent, open-ended problems should cover everything within your domain that isn’t handled by existing hardcoded paths—using a disallow list instead of an allow list to define boundaries.
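The difference between the two boundary styles can be sketched in a few lines. The intent names and the `in_domain` flag below are hypothetical; in practice, deciding whether a request falls within your domain would itself be a model or policy decision.

```python
# Allow list: only intents someone has explicitly built a path for.
ALLOWED_INTENTS = {"freeze_card", "show_balance"}

# Disallow list: everything in the domain is permitted unless ruled out.
DISALLOWED_INTENTS = {"investment_advice", "close_account"}


def allowlist_accepts(intent: str) -> bool:
    # A novel intent is rejected until a workflow is added for it.
    return intent in ALLOWED_INTENTS


def disallowlist_accepts(intent: str, in_domain: bool) -> bool:
    # A novel intent is accepted as long as it is in the domain
    # and has not been explicitly excluded.
    return in_domain and intent not in DISALLOWED_INTENTS
```

With these definitions, a request nobody anticipated (say, a hypothetical `tax_report` intent) is refused under the allow list but accepted under the disallow list, which is what lets open-ended problems reach the system at all.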

The functionality must align with your product’s target domain of expertise. The system should handle both sensitive actions (like writes and private data access) and basic operations (like public data reads) across your tools and datasets, as shown earlier.
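One way to treat sensitive and basic operations differently is to tag each tool and gate the sensitive ones behind an explicit user confirmation. The sketch below assumes this design; the tool names and the `authorize` helper are hypothetical and not taken from any real banking API.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    BASIC = "basic"          # e.g. public data reads
    SENSITIVE = "sensitive"  # e.g. writes and private data access


@dataclass(frozen=True)
class Tool:
    name: str
    sensitivity: Sensitivity


# Hypothetical tool registry for a banking assistant.
TOOLS = {
    tool.name: tool
    for tool in (
        Tool("get_exchange_rates", Sensitivity.BASIC),
        Tool("list_transactions", Sensitivity.SENSITIVE),
        Tool("move_money_to_pot", Sensitivity.SENSITIVE),
    )
}


def authorize(tool_name: str, user_confirmed: bool) -> str:
    """Decide what to do with a tool call the agent wants to make."""
    tool = TOOLS.get(tool_name)
    if tool is None:
        return "reject"  # unknown tools are never executed
    if tool.sensitivity is Sensitivity.SENSITIVE and not user_confirmed:
        return "ask_confirmation"  # pause and check with the user first
    return "run"
```

Keeping the sensitivity tag on the tool rather than on the workflow means an open-ended plan can combine tools freely while every sensitive step still goes through the same gate.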

For a banking application like Monzo, acting as “Your Financial Operating System”, the intents you might allow could look like this:

| Category | Example Request |
|---|---|
| Notifications & Reminders | “I want to be notified every time a new subscription reaches six months. And I want you to ask me, do you still want it?” |
| Transaction Filtering & Analysis | “I want to filter my feed in transactions that pertain household versus other transactions based on the categories.” |
| Transaction Filtering & Analysis | “I want to generate a report for my taxes that includes all capital gain transactions, including purchase time, value, sell time, value, and a projected capital gain” |
| Financial Calculations & Comparisons | “I want to find the best interest rate for my mortgage, given the current loan to equity value.” |
| Information Retrieval & Explanation | “I saw the markets crashing today after the announcement of DeepSeek. What could that be?” |
| Automated Actions & Tracking | “I want to put aside a dollar every time I post a tweet saying AI on X, and I want to put those money aside in a saving pot which I contribute monthly to my preferred charity.” |

These features could eventually be built into the app, but expert products can refine workflows with users by learning from their creative problem-solving attempts. Whether to give a feature “pixel real estate” in the product’s main experience becomes a decision that can be customized for different users, creating truly personalized software.

The most popular features could be incorporated into the initial product experience with clear calls-to-action. This helps users who might be hesitant to request features directly through the magic input box discover new capabilities.
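As a rough illustration of how popular requests could surface as candidates for a call-to-action, the sketch below tallies a log of handled intents and returns the most requested ones. The intent labels, the thresholds, and the `features_to_promote` helper are all assumptions made for the example.

```python
from collections import Counter
from typing import Iterable, List


def features_to_promote(
    intent_log: Iterable[str],
    min_requests: int = 3,
    top_n: int = 2,
) -> List[str]:
    """Return up to `top_n` intents requested at least `min_requests` times."""
    counts = Counter(intent_log)
    return [
        intent
        for intent, n in counts.most_common(top_n)
        if n >= min_requests
    ]


# Hypothetical log of intents the magic input box has handled.
log = (
    ["tax_report"] * 4
    + ["subscription_reminder"] * 3
    + ["charity_pot"]
)
print(features_to_promote(log))
```

The same tally could be computed per user rather than globally, which is one way to make the “pixel real estate” decision differ from one user to the next.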

As you successfully handle more user requests, your system will expand its expertise and naturally evolve into the go-to solution for your domain.

Conclusion

This is the time to build. Every vertical is up for grabs for those who can harness the shift in customer expectations and become the experts of their own domain. By building with adaptability first, we think the “magic input box” (as an analogy for adaptability) can give you the escape velocity to compete in the next platform shift.

We believe the next generation of agentic systems is a completely new paradigm, one where agents continuously learn, adapt, and evolve using code, allowing developers to reimagine how software is built and consumed. We’re excited to share more about what we’ve been researching and building to give developers the tools to embed their own “magic input box” right into existing or brand-new applications. If you’re ready or curious to embrace this shift, do reach out to get a sneak peek of what we are building!