In the evolving landscape of blockchain technology, the Polkadot ecosystem has emerged as a beacon of interoperability and scalability, representing one of the biggest bets on the multichain paradigm. In this network of interlinked and cooperative parachains (the term Polkadot uses for its connected blockchains), StorageHub seeks to address a common shortfall in blockchain technology: storage capacity. Designed to meet the pressing need for efficient, scalable, secure and decentralised storage, StorageHub stands as a testament to innovation and strategic foresight.

On our last visit to the state of StorageHub, we went through the first steps of the design: finding the scope and use-cases, identifying the actors in the system, their interactions, and the incentives to motivate their actions. This article delves deeper into the technical design behind StorageHub, unravelling the intricate process that aims to redefine data storage within the Polkadot network.

Before We Continue

This article contains many technical and ecosystem-specific terms that might be confusing for newcomers, so it is best to get some definitions out of the way before delving deeper into the tech. Here’s a glossary of terms to aid in understanding:

- Parachain: A specialised blockchain integrated within the Polkadot network, designed to achieve specific functionality while benefiting from Polkadot’s security and interoperability.

- Substrate: A modular framework for building blockchains, enabling developers to create customised blockchains tailored to specific needs.

- FRAME (Framework for Runtime Aggregation of Modularised Entities): A set of modules (or pallets) used for developing Substrate blockchains, providing the necessary building blocks for runtime development.

- Runtime: Compiled code in WASM that specifies the rules for the state transition function of a parachain. In simplified terms, a runtime defines which transactions a user can execute on a given chain.

- MSP (Main Storage Providers): One of the two kinds of Storage Providers in StorageHub. They are meant to provide good value propositions for data retrieval, and are freely chosen by users based on that proposition.

- BSP (Backup Storage Providers): The other kind of Storage Providers in StorageHub. Their goal is to provide data redundancy and unstoppability, meaning that no app or user relying on data stored in StorageHub should be vulnerable to a single point of failure. BSPs are what ensures that users can always change to another MSP, if they desire to do so.

- XCM (Cross-Consensus Messaging): A protocol in Polkadot for sending messages and executing actions across different blockchains (parachains).

- Merkle Patricia Trie: A data structure used in blockchain for securely and efficiently managing and verifying large datasets.

- Fingerprint (in the context of this article): a cryptographic output that uniquely identifies the contents of a file, like a hash or a Merkle root.

Design Goals

The design of StorageHub is underpinned by several core objectives, each aimed at addressing the fundamental challenges of decentralised storage within the Polkadot ecosystem:

- Unstoppability: No application drawing from StorageHub’s data should be vulnerable to a single point of failure within StorageHub. This often implies decentralisation to prevent that single point of failure, but it is not the only characteristic that should be taken into account, as it could conflict with the next objective…

- User simplicity: Storing, and most importantly accessing the data stored in StorageHub, should be hassle-free.

- Reliability: Users should be at ease that data stored in this system will not be taken down or made unavailable.

- Flexibility: The design should be able to cater to a wide range of use-cases, even those that do not exist today.

- Efficiency: Ensuring fast, cost-effective storage and retrieval processes, minimising the resources required for operations.

- Scalability: To handle an increasing amount of data and transactions without compromising performance, ensuring the system can grow with user demands.

These goals inform the technical choices and architecture of StorageHub, ensuring it is well-equipped to serve as a foundational component of the Polkadot ecosystem.

Technical Design

The design of StorageHub started from an overview of the system, outlining the overarching modules that constitute it. From there, the focus narrows to the critical elements within these modules, specifically the runtime architecture, strategies for optimising on-chain storage, and the alternatives for storage proofs.

StorageHub Modules

Designing the requirements for all the modules of such a complicated system is not an easy feat. To guide our process, we wrote down the most basic actions that the actors in the system would want to take, in the form of user stories. These stories are not strictly limited to users, as there are other actors in this network, such as Storage Providers.

We considered the following actions:

- User writes a file to StorageHub.

- User overwrites a file in StorageHub.

- User changes Main Storage Provider.

- User reads file from Main Storage Provider.

- User updates permissions for reading a file it owns.

- User updates permissions for writing a file it owns.

- User “deletes” file (stops paying for its storage).

- Main Storage Provider advertises its service in StorageHub.

- Main Storage Provider delists its services from StorageHub.

- Backup Storage Provider signs up to provide storage capacity to StorageHub.

- Backup Storage Provider removes itself from StorageHub.

- Storage Provider increases its stake to provide more storage capacity to StorageHub.

The next step was to think of what modules of the design would be involved in each action, and what would be needed of them to allow for that action to happen, in a secure and efficient manner. Let’s take “User writes a file to StorageHub” as an example. The diagram below shows the steps and actors of the system that take part in this user story.

Here we identify 5 actors as part of this interaction:

- User

- Alien parachain (any other Polkadot parachain that is not StorageHub)

- StorageHub Parachain

- MSPs

- BSPs

We can identify some modules that are strictly necessary for this, and some that would greatly benefit the user/developer experience, but are not mandatory. These are the modules, followed by a brief description of them:

Mandatory Modules

- File Transfer Module (p2p): A Rust-based asynchronous module using libp2p for peer-to-peer file transfers between alien parachain nodes and Storage Providers, handling sending and receiving files with robust connection management and monitoring on-chain StorageHub activity.

- StorageHub Light Client: A lightweight client for interfacing with the StorageHub parachain, capable of synchronising with the latest blocks, verifying transactions, filtering events, and managing transaction signing and sending.

Optional Modules

- Pallet for Submitting Storage Requests: A pallet that simplifies the XCM message construction for submitting storage requests to StorageHub, enhancing the developer experience and reducing the risk of bugs due to message structure changes.

- Client Running Merkle Tree Generator: A web-friendly package providing tools and functions to correctly hash and Merklise files before sending, ensuring consistency in the file storage process and abstracting complex details for dApp developers.

- Library for Separating File into Chunks: A library aimed at alien node operators, handling the separation of files into chunks, applying Erasure Coding, and computing fingerprints, thereby ensuring reliability and trust in StorageHub’s file handling process.

- Library for Adding RPC Method to Node: A library designed to help parachain node developers integrate an RPC method that manages file uploads and transaction handling with StorageHub, providing a standardised or recommended approach to enhance compatibility and ease of use.
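To make the chunking, Erasure Coding and fingerprinting steps mentioned for the chunk-separation library more concrete, here is a minimal sketch. It is not StorageHub's implementation: it uses a single XOR parity shard, which is far simpler than the codes a production system would likely use, and the function names (`split_into_chunks`, `encode`, `recover`, `fingerprint`) and the SHA-256 based fingerprint are assumptions made for illustration.

```python
import hashlib
from functools import reduce
from typing import List, Optional


def split_into_chunks(data: bytes, k: int) -> List[bytes]:
    """Split data into k equally sized chunks, zero-padding the tail."""
    size = -(-len(data) // k) or 1  # ceiling division, at least 1 byte
    padded = data.ljust(size * k, b"\x00")
    return [padded[i * size:(i + 1) * size] for i in range(k)]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Return k data shards plus one XOR parity shard (hypothetical scheme)."""
    chunks = split_into_chunks(data, k)
    return chunks + [reduce(xor_bytes, chunks)]


def recover(shards: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing shard by XOR-ing all the others."""
    missing = [i for i, shard in enumerate(shards) if shard is None]
    if len(missing) > 1:
        raise ValueError("a single parity shard can only repair one loss")
    repaired = list(shards)
    if missing:
        present = [shard for shard in shards if shard is not None]
        repaired[missing[0]] = reduce(xor_bytes, present)
    return repaired


def fingerprint(shards: List[bytes]) -> bytes:
    """Assumed fingerprint: a hash over the hashes of all shards."""
    return hashlib.sha256(
        b"".join(hashlib.sha256(shard).digest() for shard in shards)
    ).digest()


data = b"decentralised storage for Polkadot"
shards = encode(data, k=4)
damaged = list(shards)
damaged[2] = None  # simulate a lost shard
restored = recover(damaged)
original = b"".join(restored[:4])[:len(data)]  # drop parity and padding
```

Note that the original file length has to be kept alongside the shards so that the zero padding can be stripped after recovery.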

Following the procedure used for “writing a file”, we worked through the rest of the user stories, meticulously identifying mandatory and optional modules, as well as the functionality each of them would have to offer.

StorageHub Runtime

Naturally, a module that is central to all of these interactions is the parachain itself or, to be more specific, StorageHub’s runtime, which dictates the kind of transactions that are valid to execute in StorageHub. Given its complexity, the runtime deserved its own study.

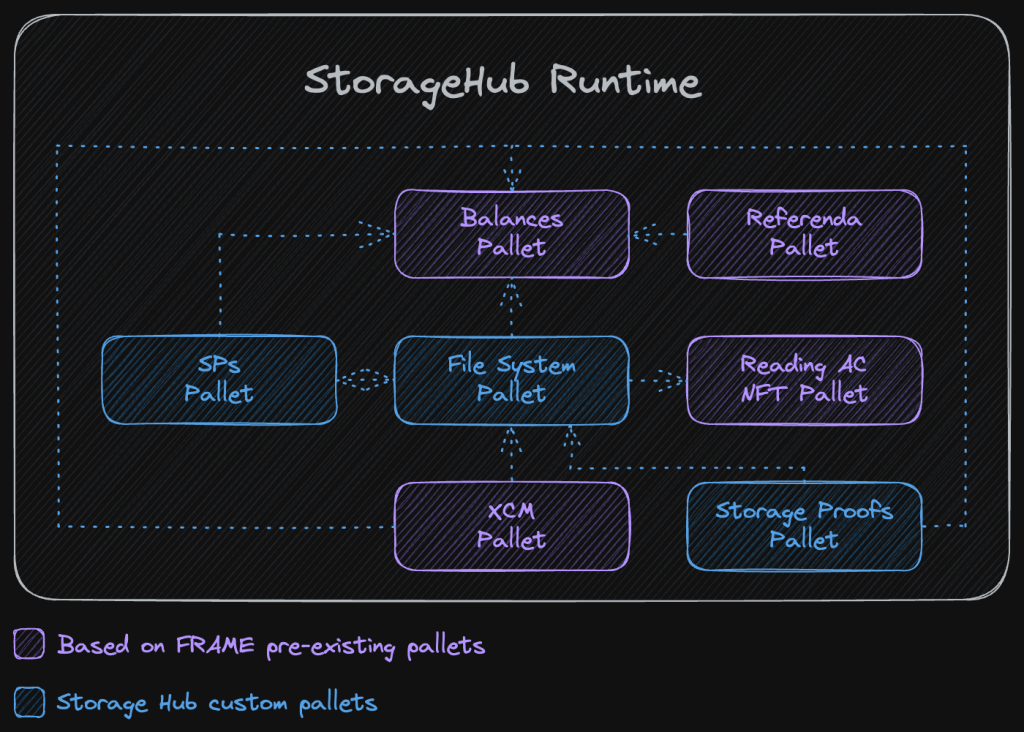

StorageHub’s runtime is a Substrate-based runtime, and like many of those, it uses FRAME’s pallets as building blocks. Below we show an image of some of the most important pallets that compose StorageHub’s runtime, and their interconnections. This selection, although not exhaustive, is good enough to highlight the pallets that serve StorageHub’s unique purpose.

Each of these pallets was thoroughly analysed, both in its inner workings and in the kind of interface it would expose. For the File System Pallet, which sits at the heart of StorageHub, several questions had to be answered:

- How to assign BSPs to a new storage request?

- How to delete files from StorageHub?

- How to clean up on-chain storage?

We designed an assignment algorithm that would offload the computational burden of deciding which file goes to whom onto the BSPs themselves. At the same time, a randomness component would ensure fair distribution among Providers, and a time component added to this random criterion would eventually allow any Provider to volunteer. The criterion would take the form of a calculation that each BSP would compute, to see whether or not it qualified for storing a given file. The result of that computation would have to be below a threshold, but that threshold would relax over time.

Additionally, the minimum starting threshold would have to vary with the number of BSPs in the network.
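As a rough illustration of the volunteering criterion described above, the sketch below shows one way such a relaxing threshold could work. It is not StorageHub's actual algorithm: the SHA-256 based score, the linear relaxation, the rule tying the starting threshold to the number of BSPs, and every name and parameter (`score`, `starting_threshold`, `threshold_at`, `can_volunteer`, `target_replicas`, `blocks_to_open`) are assumptions made for the example.

```python
import hashlib

MAX_SCORE = 2**256 - 1  # largest value a SHA-256 digest can take


def score(bsp_id: bytes, file_fingerprint: bytes) -> int:
    """Pseudo-random score each BSP can compute locally for a given file."""
    digest = hashlib.sha256(bsp_id + file_fingerprint).digest()
    return int.from_bytes(digest, "big")


def starting_threshold(num_bsps: int, target_replicas: int) -> int:
    """Assumed rule: on average, `target_replicas` BSPs qualify right away."""
    if num_bsps <= target_replicas:
        return MAX_SCORE
    return MAX_SCORE * target_replicas // num_bsps


def threshold_at(elapsed_blocks: int, num_bsps: int,
                 target_replicas: int, blocks_to_open: int) -> int:
    """Relax the threshold linearly until every BSP qualifies."""
    if elapsed_blocks >= blocks_to_open:
        return MAX_SCORE
    start = starting_threshold(num_bsps, target_replicas)
    return start + (MAX_SCORE - start) * elapsed_blocks // blocks_to_open


def can_volunteer(bsp_id: bytes, file_fingerprint: bytes, elapsed_blocks: int,
                  num_bsps: int, target_replicas: int = 3,
                  blocks_to_open: int = 100) -> bool:
    """A BSP qualifies once its score falls at or below the current threshold."""
    limit = threshold_at(elapsed_blocks, num_bsps, target_replicas, blocks_to_open)
    return score(bsp_id, file_fingerprint) <= limit
```

Because the score depends only on public values, each BSP can run the check by itself, and anyone verifying a volunteer's claim can recompute the same result.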

Keeping records of all the files stored in StorageHub is also one of the responsibilities of the File System Pallet, and it proved to be a very challenging design as well. On the one hand, you need the runtime to have some sort of information about files being stored in the network, to request proofs and manage payments. On the other hand, keeping records on a per-file basis means that the on-chain storage would grow with the number of files stored. On-chain storage is a limiting factor when it comes to blockchains. After all, that is the whole reason for StorageHub’s existence. For StorageHub to be successful it should scale properly and adapt to all the storage needs the Polkadot ecosystem will demand of it, so we came up with some clever cryptographic ways of avoiding per-file records on-chain, while keeping the same guarantees as if we had them.

The approach is based on what we call Merkle Patricia Forests, which are nothing other than Merkle Patricia Tries of Merkle Patricia Tries. The root of a Trie represents a file, and the root of a Forest represents all the files being stored by a single Storage Provider. This root of roots is the only information that needs to be stored on-chain, and it works as a cryptographic “summary” of everything stored by that Provider. Naturally, this brings along several complexities related to the fact that the runtime doesn’t actually know what belongs to that root; it can only check proofs against it. However, these complexities can be overcome with cryptographic principles and creative engineering, and the benefits greatly justify the added effort.
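The "root of roots" idea can be sketched in a few lines. To keep the example short, it swaps the Merkle Patricia Trie for a plain binary Merkle tree over SHA-256, so it only illustrates the layering and not the actual data structure; `merkle_root`, `file_root`, `forest_root` and the tiny chunk size are hypothetical.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list) -> bytes:
    """Root of a binary Merkle tree (stand-in for a Merkle Patricia Trie)."""
    if not leaves:
        return h(b"")
    level = [h(b"\x00" + leaf) for leaf in leaves]  # prefix separates leaves from nodes
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last node on odd levels
        level = [h(b"\x01" + level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0]


def file_root(data: bytes, chunk_size: int = 4) -> bytes:
    """Inner tree: one root per file, built over the file's chunks."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    return merkle_root(chunks)


def forest_root(files: dict) -> bytes:
    """Outer tree: one root per Provider, built over its files' roots."""
    # Sorting by key makes the result independent of insertion order.
    return merkle_root([file_root(files[key]) for key in sorted(files)])


provider_files = {"a.txt": b"hello world", "b.txt": b"polkadot"}
on_chain_value = forest_root(provider_files)  # 32 bytes, however many files exist
```

Whatever the number or size of the files, the value kept on-chain stays at 32 bytes, while any change to any file produces a different root.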

Yet another crucial pallet is the one in charge of storage proofs, or to be correct, storage-time proofs. A Storage Provider should be required to prove not only that it keeps the files it committed to store in its hot storage, but also that it has been storing them over time, and not only at the specific moment in which it is asked to prove it.

Three different cryptographic algorithms for implementing storage proofs were discussed. A storage proof is a cryptographic result demonstrating that a Storage Provider keeps storing a specific file. The algorithms compared were:

- ePoSt

- Filecoin’s Proof of Replication and Proof of Spacetime (PoRep and PoSt)

- The Merklised Files approach our team produced

Storage proofs are essential to ensure that Storage Providers (SPs), including MSPs and BSPs, securely store files. The ideal storage proof algorithm should be computationally cheap, require no dedicated or expensive hardware (especially for BSPs), allow on-chain verification without accessing the full file data, fit within a regular Substrate extrinsic, and prevent SPs from only partially storing a file. Let’s take a deeper look at each of the algorithms we compared:

ePoSt

ePoSt (Practical and Client-Friendly Proof of Storage-Time) is a state-of-the-art protocol for proving continuous data storage over time. It uses a series of challenges that the SP must correctly respond to, proving they’ve been storing the file continuously. It is a sophisticated and elegant protocol, but it is complex, has seen little real-world testing, and has many parts specifically optimised for the use case it was designed for.

PoRep and PoSt

Filecoin’s approach uses PoRep for creating a unique representation of the file stored by an SP and PoSt for the SP to prove continuous storage. It is a market-tested, robust approach with advanced cryptographic components but requires significant computational resources and dedicated hardware, which conflicts with the requirement for BSPs to run on cheap infrastructure.

Merklised Files

This approach involves breaking down a file into small chunks, creating a Merkle Patricia Trie, and storing the root on-chain. The runtime challenges SPs by requesting a proof for a random chunk. It relies on high-frequency challenges and generates many on-chain transactions, but it’s simple to understand and implement, using well-tested cryptographic structures.
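The challenge-response loop described above can be sketched as follows. As a simplification, the example uses a plain binary Merkle tree over SHA-256 rather than a Merkle Patricia Trie, and the way the challenged chunk is derived from a seed is an assumption; all names (`split`, `build_levels`, `prove`, `verify`, `challenged_index`) are hypothetical.

```python
import hashlib

CHUNK_SIZE = 8  # unrealistically small, chosen only to keep the example readable


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def split(data: bytes) -> list:
    return [data[i:i + CHUNK_SIZE] for i in range(0, len(data), CHUNK_SIZE)]


def build_levels(chunks: list) -> list:
    """All tree levels, from hashed chunks up to the single root."""
    if not chunks:
        raise ValueError("cannot build a tree over an empty file")
    level = [h(b"\x00" + chunk) for chunk in chunks]
    levels = []
    while True:
        if len(level) > 1 and len(level) % 2:
            level = level + [level[-1]]  # pad odd levels with the last node
        levels.append(level)
        if len(level) == 1:
            return levels
        level = [h(b"\x01" + level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]


def prove(levels: list, index: int) -> list:
    """Provider side: sibling hashes on the path from a chunk to the root."""
    proof = []
    for level in levels[:-1]:
        proof.append(level[index ^ 1])
        index //= 2
    return proof


def verify(root: bytes, index: int, chunk: bytes, proof: list) -> bool:
    """Runtime side: needs only the root, the chunk and the proof."""
    node = h(b"\x00" + chunk)
    for sibling in proof:
        node = h(b"\x01" + sibling + node) if index % 2 else h(b"\x01" + node + sibling)
        index //= 2
    return node == root


def challenged_index(seed: bytes, root: bytes, num_chunks: int) -> int:
    """Assumed rule: derive the challenged chunk from fresh randomness."""
    return int.from_bytes(h(seed + root), "big") % num_chunks


data = b"StorageHub keeps this file safe!"
chunks = split(data)
levels = build_levels(chunks)
root = levels[-1][0]  # the only value the chain has to remember
index = challenged_index(b"block-randomness", root, len(chunks))
proof = prove(levels, index)
accepted = verify(root, index, chunks[index], proof)
```

Since the Provider cannot predict which chunk will be requested, answering repeated challenges correctly requires keeping the whole file, which is why this approach leans on frequent challenges.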

After careful consideration, our team concluded that the Merklised Files approach is most suitable for StorageHub, primarily due to its simplicity and compatibility with the design’s principles. While Filecoin’s approach has strong advantages, its requirement for significant computational resources makes it unsuitable for BSPs. ePoSt, despite its novel approach and optimisations, is complex and untested in real-world scenarios. However, ePoSt could be considered in the future as an upgrade to the Merklised Files approach, potentially reducing on-chain transaction traffic while maintaining assurance of continuous file storage.

Roadmap to Reality

Once we were happy with the shape the design was taking, an implementation plan was laid out to make StorageHub a reality. The roadmap was split into two primary phases, with an overall focus on establishing a robust storage solution over a period of 12 months.

Phase 1: Development (8 months)

This phase is dedicated to constructing the foundational components of StorageHub. It involves the development of the StorageHub Runtime, which includes an array of Pallets such as the mentioned File System and Storage Proofs pallets. Additionally, it entails creating the BSP and MSP Clients responsible for file transfer, proof submission, fee charging, and file monitoring tasks. Finally, another significant component is the File Uploader Client, designed to monitor on-chain events and facilitate connections with MSPs and BSPs.

Phase 2: Testing, Audit, and Optimisations (4 months)

The second phase focuses on refining the components developed in Phase 1. It includes rigorous testing, integration, and auditing of the StorageHub Runtime and both the BSP and MSP Clients. This phase also involves the development of an MSP example implementation, setting up the infrastructure for its operation, and producing comprehensive documentation covering protocols, hardware specifications, and operational guidelines.

The roadmap is designed to be flexible, accommodating ongoing development, community feedback, and iterative improvements throughout the project’s lifecycle. Intermediate versions of StorageHub will be deployed during the 8-month development phase to facilitate testing and feedback gathering.

Conclusion

In conclusion, StorageHub’s design and implementation plan is a testament to the innovation and strategic foresight in the Polkadot ecosystem. The intricate process of creating a scalable, secure, and efficient storage solution presents several challenges, but the meticulous planning and consideration of StorageHub’s design make it well-equipped to serve as a foundational component in the Polkadot network. As we move forward with the implementation, we are excited to see StorageHub’s potential unfold and contribute to the growth and success of the Polkadot ecosystem.